Going Berserq with generative AI for promptography

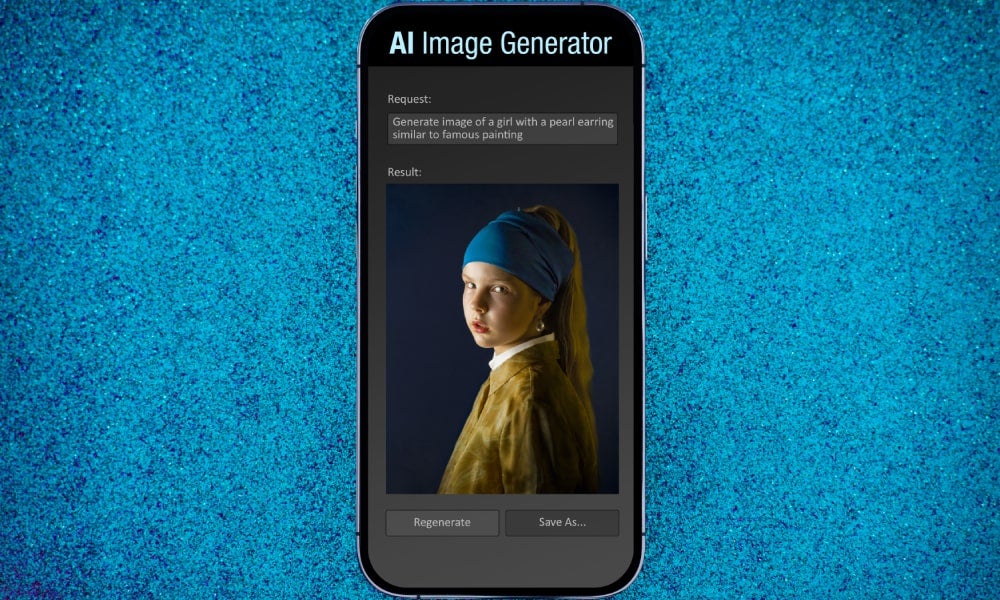

Advanced AI models like the ones developed by Berserq are disrupting the stock photo industry by generating realistic images based on a few descriptive words.

While AI technologies like ChatGPT and Google Bard gain attention, less visible but impactful advances in generative AI models are quietly revolutionising businesses and disrupting traditional models, especially in visual, music, and audiovisual applications. AI-powered bespoke image creation has significant implications for advertising and marketing businesses, both now and in the future.

During a recent interview at UNSW Business School, James Wan, Co-Founder at Berserq, and former Head of IP and Privacy at Harrison.ai, discussed the revolutionary potential of their generative AI application in transforming the production of “photographic” images. Talking to UNSW Business School Professor of Practice Peter Leonard, Mr Wan spoke about how AI is already reshaping business processes and what lies ahead.

How do generative AI systems work?

Generative AI systems, such as OpenAI’s DALL-E 2, offer significant potential for businesses in today’s rapidly evolving technological landscape. They can revolutionise creative processes, allowing businesses to generate customised content at scale almost effortlessly. However, as with any transformative technology, caution and strategic foresight are essential.

According to Mr Wan, an AI Ethics Technical Committee member at the Australian Computer Society (ACS), diffusion models like OpenAI’s DALL-E are used to generate images based on a given text prompt. They do this through a step-by-step process of refining an image by de-noising. This allows the model to explore and create random, detailed and unique images.
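The step-by-step de-noising described above can be illustrated with a toy loop. This is a minimal sketch and not how DALL-E or Berserq is implemented: a real diffusion model uses a trained neural network to estimate the noise at each step, whereas here a known target (a hypothetical four-pixel "image") stands in for that network. The names `denoise_step` and `generate` are made up for illustration.

```python
import random


def denoise_step(current, predicted_noise, step_size):
    """Remove a fraction of the estimated noise from each pixel value."""
    return [c - step_size * n for c, n in zip(current, predicted_noise)]


def generate(target, steps=50, step_size=0.2, seed=0):
    """Refine random noise towards an image over many small steps."""
    rng = random.Random(seed)
    # Start from pure random noise, as a diffusion sampler does.
    image = [rng.gauss(0.0, 1.0) for _ in target]
    for _ in range(steps):
        # Assumption: a real model would predict this noise with a trained
        # network guided by the text prompt. Here the "noise" is simply the
        # gap between the current values and a known target.
        predicted_noise = [c - t for c, t in zip(image, target)]
        image = denoise_step(image, predicted_noise, step_size)
    return image


target = [0.1, 0.5, 0.9, 0.5]  # hypothetical 4-pixel "image"
result = generate(target)
max_error = max(abs(r - t) for r, t in zip(result, target))
print(f"largest remaining error after refinement: {max_error:.6f}")
```

Each pass removes only part of the estimated noise, so the picture emerges gradually rather than in a single jump. Changing `seed` changes the starting noise, which in a real model is one reason the same prompt can yield different images.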

Mr Wan explained that diffusion models rely on the contrastive language-image pre-training (CLIP) model, which learns the relationship between images (i.e. pixel data) and text by computing representations of both, called embeddings. CLIP is useful for tasks like recognising objects in images or describing the content of an image using words. So, while diffusion models like DALL-E focus on generating new images, CLIP focuses on understanding the connection between images and text. Both models are important in the field of generative AI, but they have different goals and ways of working.
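To make the idea of embeddings concrete, the sketch below compares one image embedding against two caption embeddings using cosine similarity, a common way of scoring how closely two vectors point in the same direction. The three-number vectors and captions are invented for illustration; real CLIP embeddings have hundreds of dimensions and come from trained image and text encoders, which are not shown here.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings: in practice these would be produced by the
# model's image encoder and text encoder respectively.
image_embedding = [0.9, 0.1, 0.2]
caption_embeddings = {
    "a dog on a beach": [0.8, 0.2, 0.1],
    "a city skyline at night": [0.1, 0.9, 0.3],
}

# Score every caption against the image and keep the closest match.
scores = {
    caption: cosine_similarity(image_embedding, embedding)
    for caption, embedding in caption_embeddings.items()
}
best_caption = max(scores, key=scores.get)
print(best_caption)
```

With these made-up numbers the first caption scores far higher than the second, which is the sense in which a model of this kind "understands" which words go with which picture.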

Mr Wan explained that one strategy behind Berserq involves leveraging the advancements in generative AI, specifically the technologies of both CLIP and diffusion models. Together, these two technologies enable the conversion of text inputs into pixel outputs (and vice-versa), generating realistic and coherent images. In this way, he said, Berserq builds upon the work of OpenAI’s DALL-E, a text-to-image model trained on a large dataset of image-caption pairs. The model used 400 million such pairs for training, with subsequent iterations like DALL-E 2 incorporating even more training data.

“The applications of generative AI, broadly, are the ability to create realistic environments that don't exist, or they do exist but are really hard or difficult to do manually, or they generate completely new things that we haven’t ever seen before, but look kind of coherent,” explained Mr Wan.

Are AI-generated images truly original?

AI models, including those used by Berserq, learn from and are typically trained on existing datasets in digital form, such as content available on the Internet. If the goal is to generate realistic-looking images, these datasets consist of real-world images, from which the models learn patterns that they then use to generate new images, explained Mr Wan.

While AI-generated images are not direct copies of existing images, they can still be influenced by the patterns and styles present in the training data. The output of an AI model may resemble images seen in the training data in a stylistic sense, although it will likely have its own unique variations, he said.

However, the concept of "originality" becomes more complex regarding intellectual property and copyright. AI-generated images may raise questions regarding ownership and attribution. For this reason, it is incredibly important for companies using generative AI systems (and the companies building and selling their work) to consider legal and ethical implications, as well as the potential impact on creative industries and artists.

Ultimately, Mr Wan said he believes AI-generated images can be seen as a new form of creative expression (much like the advent of photography when previously there was only hand-drawn painting or artwork), but the extent of their originality and the ethical considerations surrounding them are evolving areas of discussion and exploration in the field of AI and intellectual property rights.

Mr Wan added that his background in law has been useful in weighing the ethical questions of copyright and originality that arise in the development and use of generative AI. “We need to train AI models that perform that task of creation. So we need a training data set that’s gathered from all different kinds of sources, the internet being a really good source of data, that other datasets might be proprietary, for example, and obtaining licences granted to us,” he said.

“That's where the lawyer aspect comes in handy to negotiate those kinds of data licences as well. And then, we train those models. And now, with the availability of cloud computing, it's pretty feasible to train without having to buy your own equipment if you need to experiment and test whether your model is performant. And then it's also very quick to deploy and test on real data, or unseen data to see how it performs in real life. So how does it change? So when it generates something, is it a cohesive/coherent image? What does the human think about what’s generated?”

How will generative AI disrupt the stock photo industry?

Generative AI, such as Berserq, offers various applications, including the creation of realistic environments that may not exist in reality or the generation of entirely new and coherent visuals. By automating the generation of synthetic media, it has the potential to disrupt industries like video game assets and advertising.

According to Mr Wan, the aim behind Berserq was to disrupt advertising, especially stock photography giants like Getty and Shutterstock. These companies, he said, act mostly as intermediaries and, for the most part, are a large reason why many photographer contributors don’t appear to be paid fairly.

Mr Wan said that Berserq targets the advertising industry as its primary audience, offering the ability to quickly and efficiently create visual assets for campaigns. By enabling the generation of high-quality images from textual prompts, Berserq seeks to provide a valuable solution in this space.

Companies like Shutterstock and Getty act as intermediaries between the buyers or licensees of images and the copyright owners (i.e. photographers), he said. Often, they retain a large percentage (around 70-80 per cent) of the revenue generated from these transactions. This means that out of every dollar earned, they keep 70-80 cents for themselves. Mr Wan questioned the justification for this high cut, especially considering that websites generally do not have high operating costs. In fact, over time, the percentage paid to the photographer contributors has been decreasing, which is upsetting to the photographic community.
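The arithmetic behind that split is simple to check. The sketch below assumes a hypothetical $100 licence fee and applies the 70 and 80 per cent figures cited above; the function name and the sale price are illustrative only, and amounts are kept in whole cents to avoid rounding surprises.

```python
def photographer_share_cents(sale_price_cents, platform_cut_percent):
    """Return what is left for the photographer after the platform's cut."""
    return sale_price_cents * (100 - platform_cut_percent) // 100


sale_price_cents = 100_00  # hypothetical $100.00 licence fee

# Apply the two ends of the range cited in the article.
share_at_80 = photographer_share_cents(sale_price_cents, 80)
share_at_70 = photographer_share_cents(sale_price_cents, 70)

print(f"platform keeps 80%: photographer receives ${share_at_80 / 100:.2f}")
print(f"platform keeps 70%: photographer receives ${share_at_70 / 100:.2f}")
```

On these assumptions, the photographer receives between $20 and $30 of a $100 sale, or 20 to 30 cents of every dollar earned.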

How does Berserq aim to solve the problem of paying photographers more? According to Mr Wan, the platform gives photographers greater control by fostering direct relationships with their buyers. It allows photographers to passively monetise their unique visual styles through synthetic content generation. Each synthetic output is authenticated (via a digital certificate) as a work authorised by the photographer, which distinguishes it from content misappropriated by someone using an AI model trained on the photographer’s scraped, publicly available images.

“I think there's a way to disintermediate the stock photo industry and probably give more back to the photographers who actually do take useful and commercially valuable photos, and then also the brands get what they want a lot faster, as well as for a better price, potentially,” said Mr Wan.